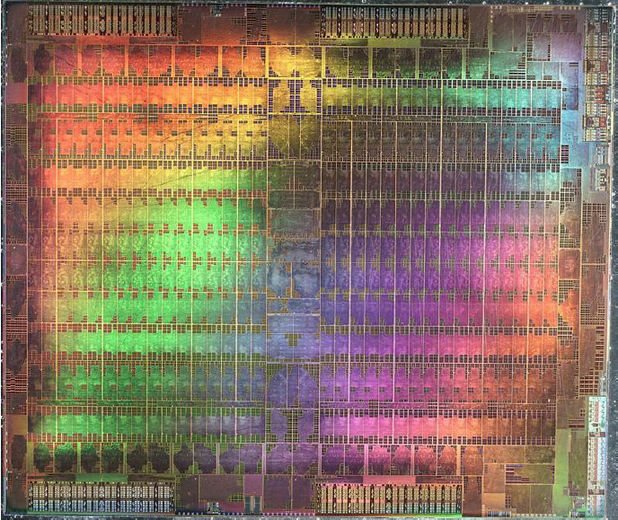

Fritzchens Fritz / Better Images of AI / GPU shot etched 3 / CC-BY 4.0

Hosted by

The Leverhulme Centre for the Future of Intelligence (LCFI), University of Cambridge, in collaboration with the Centre for Science and Thought (CST), University of Bonn

Conference theme overview

The aim of this conference is to interrogate how an intercultural approach to ethics can inform the processes of conceiving, designing, and regulating artificial intelligence (AI).

Many guidelines and policy frameworks on responsible AI foreground values such as transparency, fairness, and justice, giving an appearance of consensus. However, this apparent consensus hides wide disagreements about the meanings of these concepts and may omit values that are central to cultures that have been less involved in developing these frameworks. For this reason, scholars and policymakers have increasingly voiced the need to acknowledge these disagreements, foreground the plurality of visions for technological futures, and centre previously overlooked visions – as the necessary first steps in establishing shared ethical and regulatory frameworks for responsible AI.

While planetary-scale challenges demand international cooperation in search of new solutions – including those that rely on AI – to address the crises ahead of us, feminist, Indigenous, and decolonial scholars, among others, have pointed to potential problems arising from the techno-solutionism and techno-optimism implied by the universalising ‘AI for Good’ paradigm. They recognise that some groups of humans have been multiply burdened under the current, dominant system of technology production, and that this system – if unchanged – is unlikely to bring about positive transformation. To ensure that new technologies are developed and deployed responsibly, we must, therefore, acknowledge and draw on ontological, epistemological, and axiological differences, in ways that do not privilege a particular worldview. Yet in doing so, we must also work to avoid essentialising other nations or peoples, erasing extractive colonial histories, diversity washing, and cultural appropriation.

By foregrounding the many worlds of AI, we aim to create a space for dialogue between different worldviews without reifying the notion of discrete and unchanging cultural approaches to AI. By centring terms such as ‘diaspora’, we aim to examine the complex (and often violent) histories of cultural exchange and the global movement of people and ideas, which rarely take centre stage in conversations on intercultural AI ethics.

The question central to Many Worlds of AI is therefore: How can we acknowledge these complexities to facilitate intercultural dialogue in the field of AI ethics, and better respond to the opportunities and challenges posed by AI?

Many Worlds of AI is the inaugural conference in a series of biennial events organised as part of the ‘Desirable Digitalisation: Rethinking AI for Just and Sustainable Futures’ research programme. The ‘Desirable Digitalisation’ programme is a collaboration between the Universities of Cambridge and Bonn funded by Stiftung Mercator. The primary aim of the programme is to explore how to design AI and other digital technologies in a responsible way, prioritising the questions of social justice and environmental sustainability.

For more information, head to the project website.

This conference is generously funded by Stiftung Mercator.