Rapid review finds leading AI companies are not meeting UK Government best practice for frontier AI safety.

by: Seán Ó hÉigeartaigh, Yolanda Lannquist, Alexandru Marcoci, Jaime Sevilla, Mónica Alejandra Ulloa Ruiz, Yaqub Chaudhary, Tim Schreier, Zach Stein-Perlman and Jeffrey Ladish

Introduction

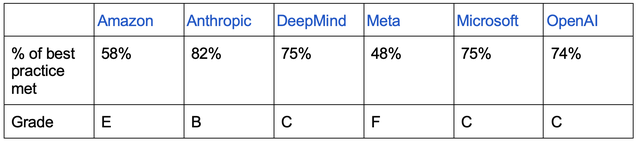

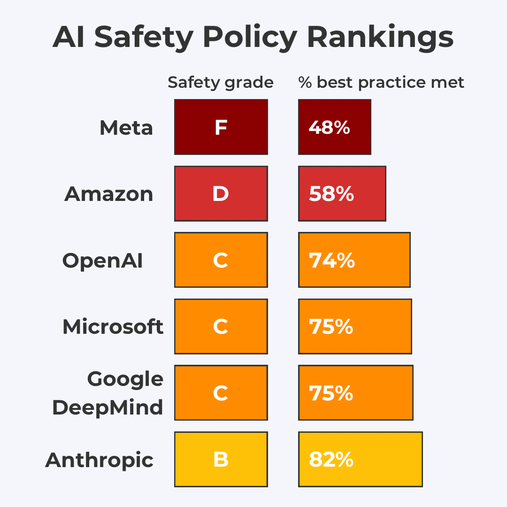

Are frontier AI companies meeting best practices for frontier AI safety? Ahead of the AI Safety Summit, on Friday 27th October, the UK government released “Emerging processes for frontier AI safety”, a detailed set of 42 best practice policies, across 9 categories, that frontier AI companies should be following. At the request of the government, the six leading AI companies also released their AI Safety Policies on the same day. The natural question, then, is whether the companies are meeting the government’s “best practices”.

We — a group of 15 AI academics, AI regulation experts, and technical AI researchers — have assessed the companies’ policies against the government’s best practice, and assigned each a score to see how they compare to the best practice, and to each other.

Our analysis finds that no company scores above 82% against the best practices, and several are falling significantly behind. We believe this comparison can be an important way to incentivize commitments and transparency, identify areas for improvement, and encourage a 'race to the top' on AI safety. We think the analysis shows the urgent need for further action on AI safety across all tech companies, and the need for regulation to ensure best practices are followed.

In the rest of this piece we explain our methods, present our results, and discuss each company's score.

Methods

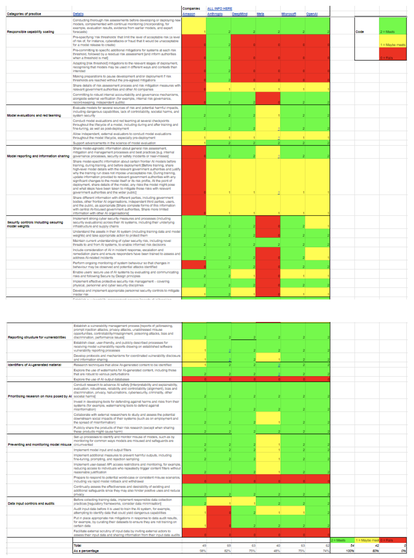

We created a spreadsheet to score how well each company is meeting each of the 42 policies laid out in "Emerging Processes for Frontier AI Safety". The spreadsheet is openly available here, and readers can put in different scores to see how that affects the overall company scores.

A group of 15 researchers in AI safety, governance, risk, foresight and forecasting (including all the co-authors of this report) scored, on a 0-2 scale, how clearly each company’s Safety Policy aligned with the 42 best practices in the government’s “Emerging Processes for Frontier AI Safety”. We assigned 2 points for “Meets best practice”, 1 for “Maybe meets best practice”, and 0 for “Fails to meet best practice”. The points were then totalled, with a perfect score of 84 (2 points for each of the 42 practices) corresponding to 100%. We graded overall scores of 90-100% as an A, 80-90% as a B, 70-80% as a C, 60-70% as a D, 50-60% as an E, and 0-50% as an F.
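The scoring arithmetic described above can be sketched in a few lines of Python. This is only an illustrative sketch, not the spreadsheet's actual formulas: the function names are hypothetical, and because the published grade bands share their endpoints (for example, 80% appears in both the B and C ranges), the code assumes that each band includes its lower bound.

```python
from typing import Sequence

NUM_PRACTICES = 42           # best practices in "Emerging processes"
MAX_POINTS_PER_PRACTICE = 2  # 2 = meets, 1 = maybe meets, 0 = fails

# (lower bound in percent, grade). ASSUMPTION: each band includes its
# lower bound, since the published ranges overlap at the boundaries.
GRADE_BANDS = [(90, "A"), (80, "B"), (70, "C"), (60, "D"), (50, "E"), (0, "F")]


def percentage_score(points: Sequence[int]) -> float:
    """Convert 42 per-practice scores (each 0, 1 or 2) into a percentage of 84."""
    if len(points) != NUM_PRACTICES:
        raise ValueError(f"expected {NUM_PRACTICES} scores, got {len(points)}")
    if any(p not in (0, 1, 2) for p in points):
        raise ValueError("each score must be 0, 1 or 2")
    return 100 * sum(points) / (NUM_PRACTICES * MAX_POINTS_PER_PRACTICE)


def letter_grade(percent: float) -> str:
    """Map a percentage to a letter grade using the assumed band boundaries."""
    for lower_bound, grade in GRADE_BANDS:
        if percent >= lower_bound:
            return grade
    raise ValueError("percentage must be non-negative")


# Hypothetical score sheet (not any company's real scores):
# 30 practices met, 3 maybe met, 9 not met -> 63 of 84 points.
example = [2] * 30 + [1] * 3 + [0] * 9
print(percentage_score(example), letter_grade(percentage_score(example)))
```

Under these assumptions, the percentages reported below would map to a B for 82%, a C for 75% and 74%, an E for 58%, and an F for 48%.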

Our methods were inspired by other expert elicitations (see footnote 1). It is helpful in these exercises to have diversity of expertise, independence in assigning scores, and opportunities for discussion. All scores were independently assigned and checked by at least two separate people. Our team of 15 people includes the following backgrounds/areas of expertise:

- Researchers from Oxford and Cambridge University

- AI alignment researcher(s)

- AI forecaster(s)

- AI regulation experts

- Language model security practitioner/researcher(s)

Our analysis was a rapid review, and we encourage more detailed analyses, and criticisms of our approach and results.

Results and Discussion

Image: Screenshot of the scoring spreadsheet.

Full spreadsheet available here.

Amazon - Score: 58%

Overall, Amazon’s response was quite short and less detailed than those of other companies. Amazon had a particularly strong section on the physical security measures it uses to protect its data centres.

However, it lost points in the ‘Model reporting and information sharing’ section, as its safety policy mentions neither risk management nor model cards, and it neither considers sharing more information with governments nor commits to third-party auditing.

Anthropic - Score: 82%

Anthropic is meeting many of the best practice policies (see Anthropic's response). It did particularly well in the ‘Responsible capability scaling’ section, as it is the only company to have published such a policy so far: its Responsible Scaling Policy (RSP).

However, it mainly lost points in the ‘Data input controls and audits’ section. It seems to have misread the Government’s guidance as referring only to user data, rather than to training datasets in general. As such, it does not detail responsible data collection practices, dataset audits, or risk mitigations. On a minor note, Anthropic provides its responses across several webpages rather than on a single page, which makes analysis harder.

Google DeepMind - Score: 75%

Google DeepMind scored fairly well across the board (see DeepMind's response). In particular, one notable novel policy is that teams wanting to use data for research (including pre-training and fine-tuning of frontier models) must submit a data ingestion request to a dedicated data team.

However, it mainly lost points in the ‘Responsible capability scaling’ section, as it has not yet published its policy and says only that it is “currently preparing our risk management for future generations of frontier models”.

Meta - Score: 48%

Meta (see Meta's response) received good scores in the ‘Prioritising research on risks posed by AI’ section.

However, it mainly lost points in the ‘Security controls including securing model weights’ and ‘Preventing and monitoring model misuse’ sections. This obviously touches on the emotive debate about open-sourcing frontier AI models, and the strong stance Meta has taken on one side of that debate. The Government report notes on open-source:

“Open-source access to low-risk models may enable greater understanding of AI system safety through greater public scrutiny and testing of AI systems. At the same time, developers of open source models typically have less oversight of downstream uses, meaning that many of the methods for preventing the misuse of higher-risk models addressed in this section are unavailable to frontier AI organisations releasing open-source models. Such organisations can still identify and mitigate model misuse to some extent, for example, by endeavouring to enforce open-source model licences. However, releasing models via APIs provides significantly more affordances for frontier AI organisations to address misuse as they maintain visibility on how models are being used, increased control over usage and safeguards, and the ability to update or roll back a model after deployment.”

Microsoft - Score: 75%

Microsoft is meeting many of the best practice policies (see Microsoft's response). In particular, its Security Controls are excellent, though the company could demonstrate a greater commitment to securing model weights through mechanisms like multi-party authorization.

However, it mainly lost points in the ‘Responsible capability scaling’ section as it simply refers to OpenAI’s in-progress ‘Risk-Informed Development Policy’ (RDP).

OpenAI - Score: 74%

OpenAI is meeting many of the best practice policies (see OpenAI's response). In particular, its AI safety research is excellent.

However, it mainly lost points in the ‘Responsible capability scaling’ section, as it has not yet published its ‘Risk-Informed Development Policy’ (RDP). OpenAI has announced a new team that will lead this work, but has not yet clarified whether the policy will include pre-specified risk thresholds during development, additional mitigations at each threshold, and preparations to pause. Its Security Controls were also slightly weaker than those of other labs.

General

There were interesting patterns across the companies as well. All the companies except Anthropic have yet to release their policies on Responsible capability scaling. These policies are important for transparency and assurance.

There were several best practices that none of the companies met, such as:

- ‘Facilitate external scrutiny of input data by inviting external actors to assess their input data and sharing information from their input data audits’.

- ‘Prepare to respond to potential worst-case or consistent misuse scenarios, including via rapid model rollback and withdrawal’.

Furthermore, there were some that none of the companies did particularly well on, such as sharing information with government authorities in ‘Model reporting and information sharing’. These are clear areas for improvement.

On the other hand, there were some best practices that all of the companies met, such as:

- ‘Conduct research to advance AI safety’.

This could indicate that this best practice is written too broadly or vaguely, and should be tightened up and further specified in future editions of ‘Emerging processes’.

Conclusion

Our analysis finds that none of the six companies meets all the best practice policies. All companies need to do more, and publication of Responsible Capability Scaling policies is a particular priority. Anthropic performed best, but it also needs to do more to meet best practice, such as sharing more information with external auditors and government authorities. Several companies are falling significantly behind, especially Meta and Amazon. Our analysis was a rapid review, and we encourage more detailed analyses, and criticisms of our approach and results.

Acknowledgements

We would like to thank Lennart Heim and Jonas Schuett for their comments, as well as our anonymous scorers.

Footnotes

1. See, for example: A practical guide to structured expert elicitation using the IDEA protocol; Investigate Discuss Estimate Aggregate for structured expert judgement; Superforecasting: The Art and Science of Prediction; The Value of Performance Weights and Discussion in Aggregated Expert Judgments; Predicting and reasoning about replicability using structured groups.