A major new 19-author report argues that governing computing power (‘compute’) can help AI governance be more effective and targeted

By Haydn Belfield

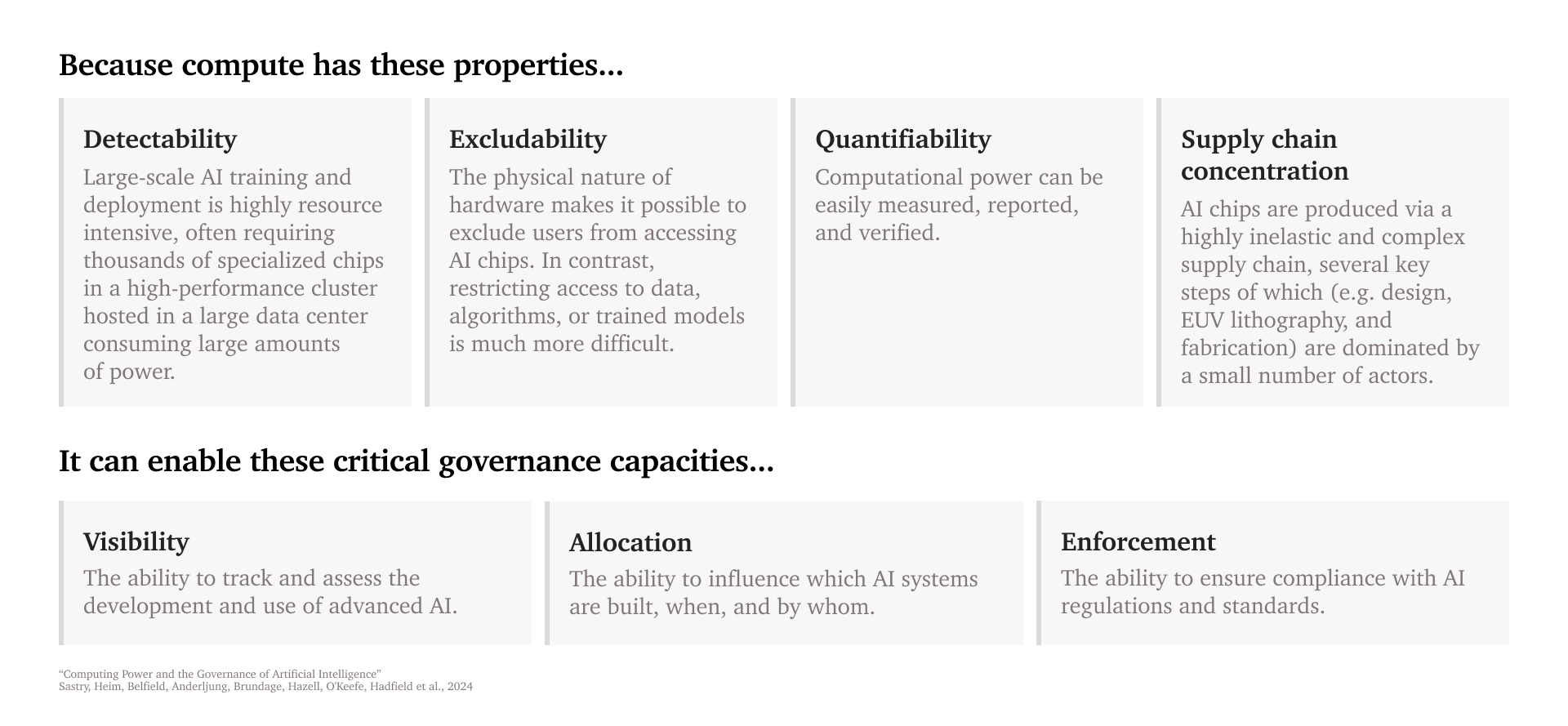

This diagram offers a summary of the main arguments of the report.

“Compute has properties that are unique among the various inputs to AI capabilities, and it is particularly important for governance of compute-intensive frontier AI models. Prominent AI governance proposals and practice in the past few years reflect this realization. With this paper, we hope to provide a better theoretical understanding of the promises and limitations of compute governance as a vehicle for AI governance, and spur more creative thinking on the future of compute governance,” says the newly published report.

Nineteen experts in the policy, law and practice of machine learning have jointly authored a major new report arguing that governing AI hardware, chips and data centres can play an important role in governing AI development and deployment.

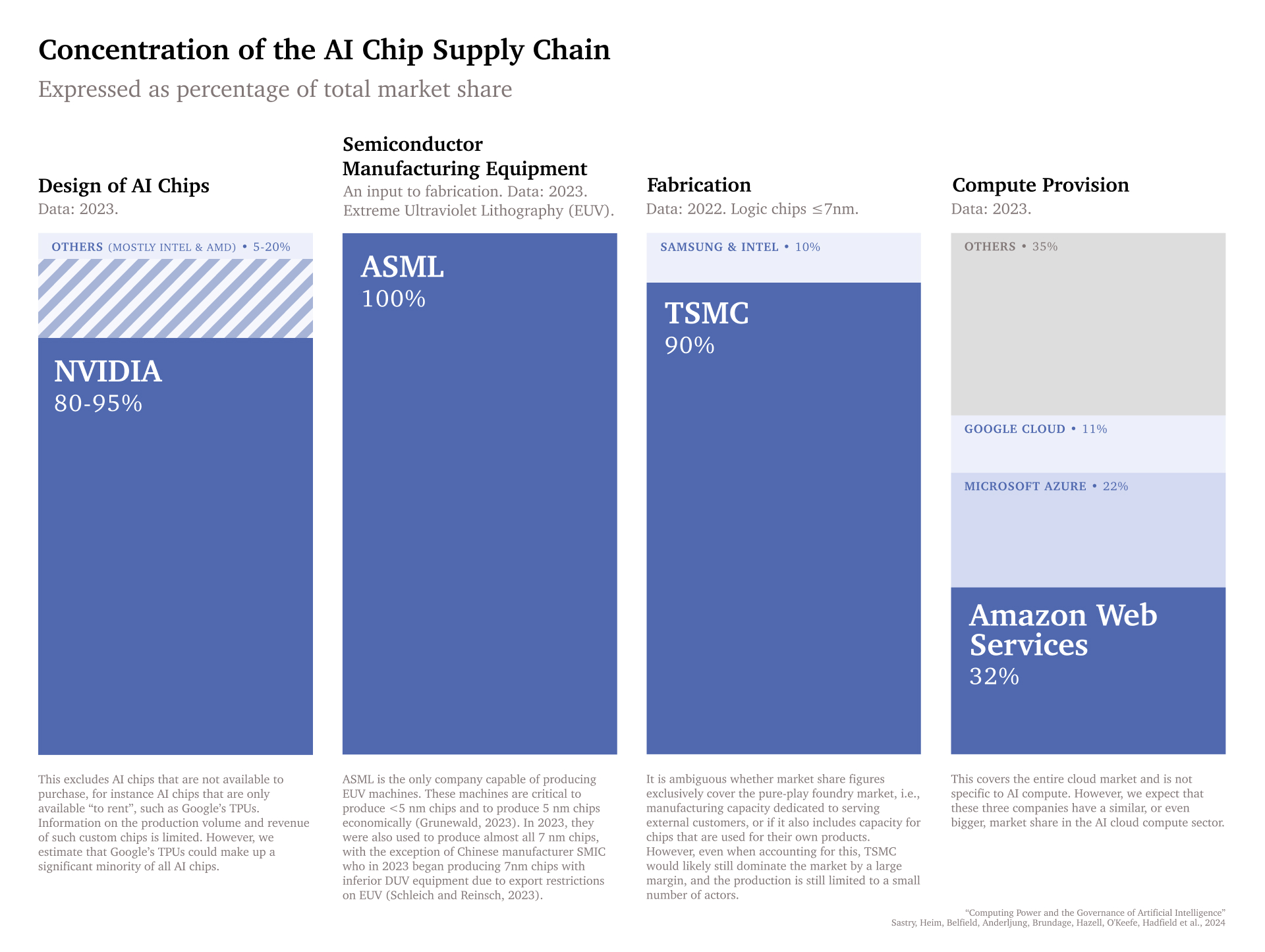

Government efforts across the world over the past year, including the US Executive Order on AI, the EU AI Act, China’s Generative AI Regulation and the UK’s AI Safety Institute, have begun to introduce a focus on compute into AI governance. The authors analyse the technical, economic and industrial aspects of compute to argue that large-scale compute has four advantages for governance: it is detectable, excludable and quantifiable, and its supply chain is highly concentrated.

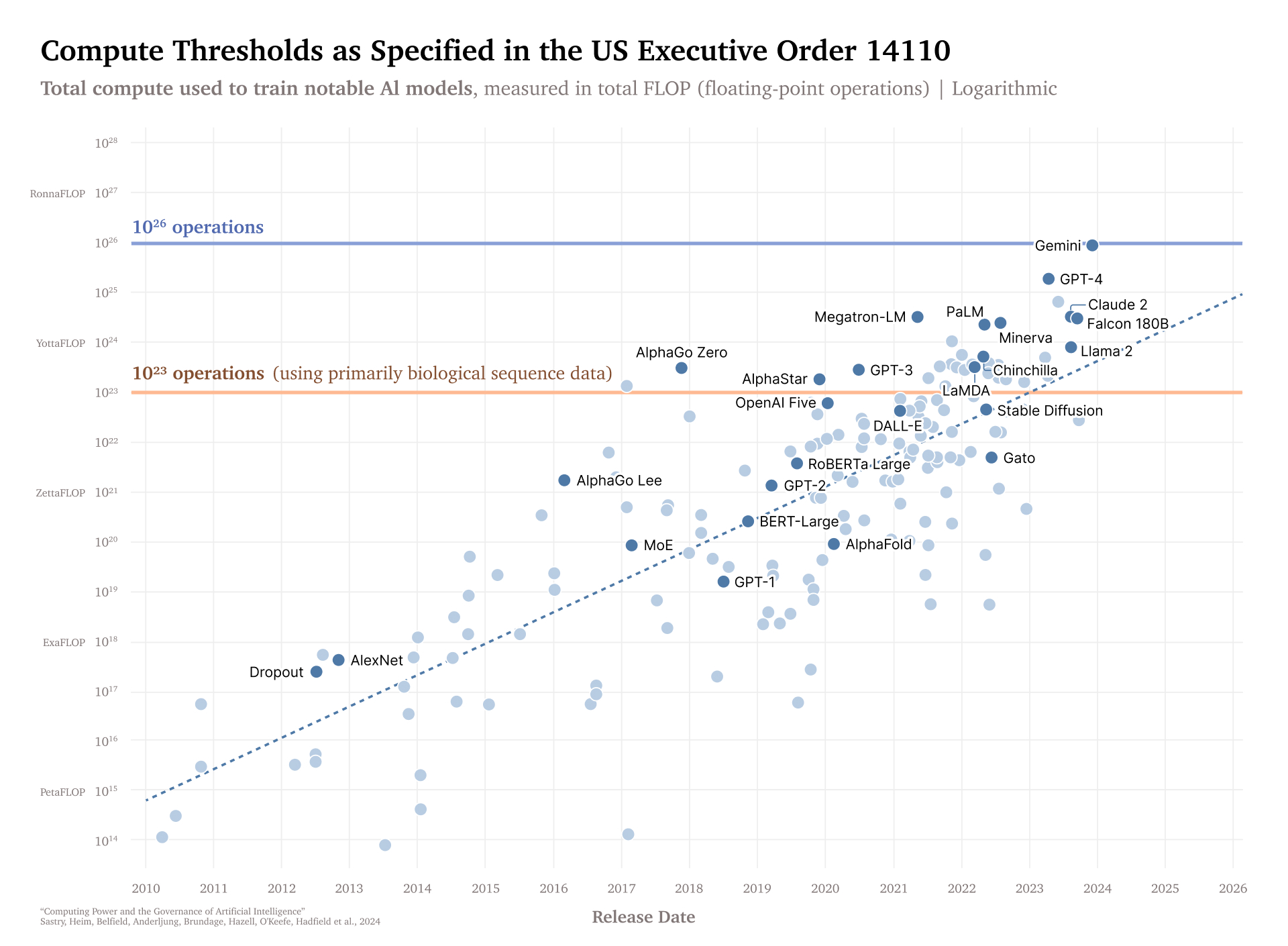

Compute thresholds set in the Executive Order: only AI systems trained above these thresholds face further scrutiny. The EU AI Act sets similar thresholds. Compute can be a useful proxy to help determine which AI systems should face extra ex-ante evaluations and testing.
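To make the idea of compute as a proxy concrete, here is a minimal Python sketch. It assumes the widely used rule of thumb that training a dense transformer costs about 6 operations per parameter per training token, and it hard-codes two thresholds as assumed here: more than 10^26 operations for the Executive Order's general reporting requirement, and more than 10^25 FLOP for the EU AI Act's presumption of systemic risk. The function names are illustrative only. The figure above and the legal texts remain authoritative; for instance, the Executive Order's lower threshold for models trained mainly on biological sequence data is omitted here.

```python
"""Sketch: training compute as a proxy for which models face extra scrutiny.

Assumptions (illustrative, not taken from the report):
- training compute ~= 6 * parameters * training tokens (dense transformer rule of thumb)
- thresholds: 1e26 operations (US Executive Order), 1e25 FLOP (EU AI Act)
"""

US_EO_THRESHOLD = 1e26      # assumed general reporting threshold, US Executive Order
EU_AI_ACT_THRESHOLD = 1e25  # assumed systemic-risk presumption, EU AI Act


def estimate_training_flop(n_params: float, n_tokens: float) -> float:
    """Approximate total training operations (forward + backward passes)."""
    return 6.0 * n_params * n_tokens


def thresholds_crossed(training_flop: float) -> list[str]:
    """Return the names of the thresholds that a training run exceeds."""
    crossed = []
    if training_flop > EU_AI_ACT_THRESHOLD:
        crossed.append("EU AI Act")
    if training_flop > US_EO_THRESHOLD:
        crossed.append("US Executive Order")
    return crossed
```

For example, a hypothetical 70-billion-parameter model trained on 2 trillion tokens comes out at about 8.4 × 10^23 operations, below both thresholds, while a 1-trillion-parameter model trained on 20 trillion tokens (about 1.2 × 10^26) crosses both. This is why compute works as a coarse filter: it is cheap to estimate and leaves the vast majority of models out of scope.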

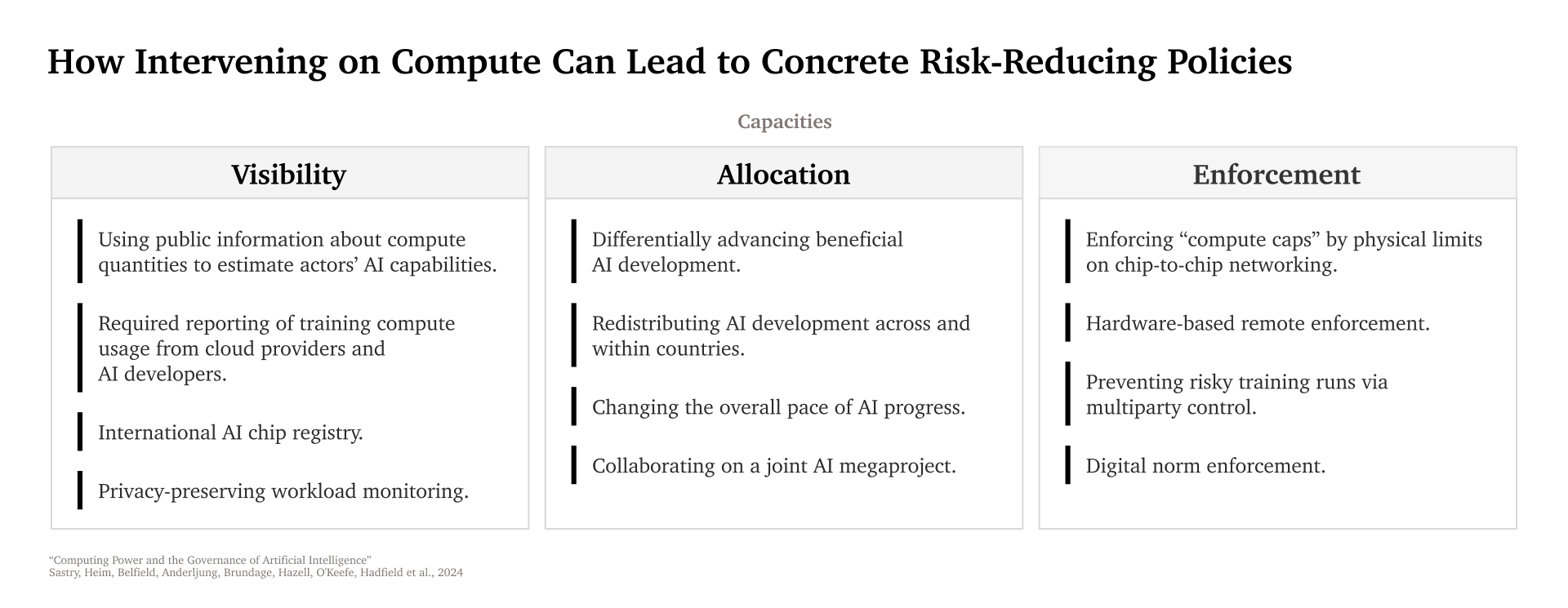

The report - Computing Power and the Governance of Artificial Intelligence - suggests that compute can be leveraged to support societal oversight of AI:

- An international AI chip registry, reporting training compute usage, and privacy-preserving workload monitoring can give regulators and governments more visibility into the opaque process of AI development.

- By allocating compute to different organisations and projects, through grants and investment in publicly owned data centres, funders can advance beneficial AI development.

- New technical mechanisms built into the chips themselves, such as physical limits on chip-to-chip networking, remote enforcement and multiparty controls on risky training runs, could make enforcement less burdensome and more targeted.
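The chip registry idea in the first bullet can be illustrated with a minimal sketch. Everything in it is hypothetical: the `ChipRegistry` class, its methods, and the serial-number and owner fields are illustrative assumptions, not a design proposed in the report. It records only which entity holds which chip and the chain of custody, so a regulator could see aggregate holdings without inspecting what the chips are running.

```python
"""Hypothetical sketch of an AI chip registry tracking ownership and transfers."""

from collections import Counter
from dataclasses import dataclass, field


@dataclass
class ChipRecord:
    serial: str
    model: str
    owner: str
    history: list[str] = field(default_factory=list)  # previous owners, oldest first


class ChipRegistry:
    """Illustrative registry of high-end data-centre AI chips (not a real system)."""

    def __init__(self) -> None:
        self._chips: dict[str, ChipRecord] = {}

    def register(self, serial: str, model: str, owner: str) -> None:
        """Record a newly produced chip and its first owner."""
        if serial in self._chips:
            raise ValueError(f"chip {serial} is already registered")
        self._chips[serial] = ChipRecord(serial, model, owner)

    def transfer(self, serial: str, new_owner: str) -> None:
        """Log a change of ownership; a KeyError signals an unregistered chip."""
        record = self._chips[serial]
        record.history.append(record.owner)
        record.owner = new_owner

    def holdings(self) -> Counter:
        """Number of registered chips currently held by each owner."""
        return Counter(record.owner for record in self._chips.values())

    def large_holders(self, min_chips: int) -> list[str]:
        """Owners holding at least `min_chips` chips, sorted by name."""
        return sorted(owner for owner, n in self.holdings().items() if n >= min_chips)
```

In practice a registry would need to cover exports, resales and decommissioning, and the `min_chips` cut-off here merely stands in for whatever scale a regulator decided warranted attention.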

A summary of the 12 interventions the report explores.

The co-authors come from a range of organisations including Cambridge University’s Leverhulme Centre for the Future of Intelligence, Centre for the Study of Existential Risk and Bennett Institute, MILA, the Harvard Kennedy School, the company OpenAI, and research organisations like GovAI and LawAI.

The authors do not present this report as the ‘final word’; rather, they hope to draw further academic and policymaker attention to compute governance. Moreover, the 100-page report does not present compute governance as a silver bullet for all issues in AI governance; it also discusses the risks of compute governance and possible mitigations. For example, if poorly designed and implemented, compute governance could raise concerns over privacy infringements or concentration of power. The authors therefore suggest five principles to mitigate these risks:

- Exclude small-scale AI compute and non-AI compute from governance

- Implement privacy-preserving practices and technologies

- Focus compute-based controls where ex ante measures are justified

- Periodically revisit controlled computing technologies

- Implement all controls with substantive and procedural safeguards

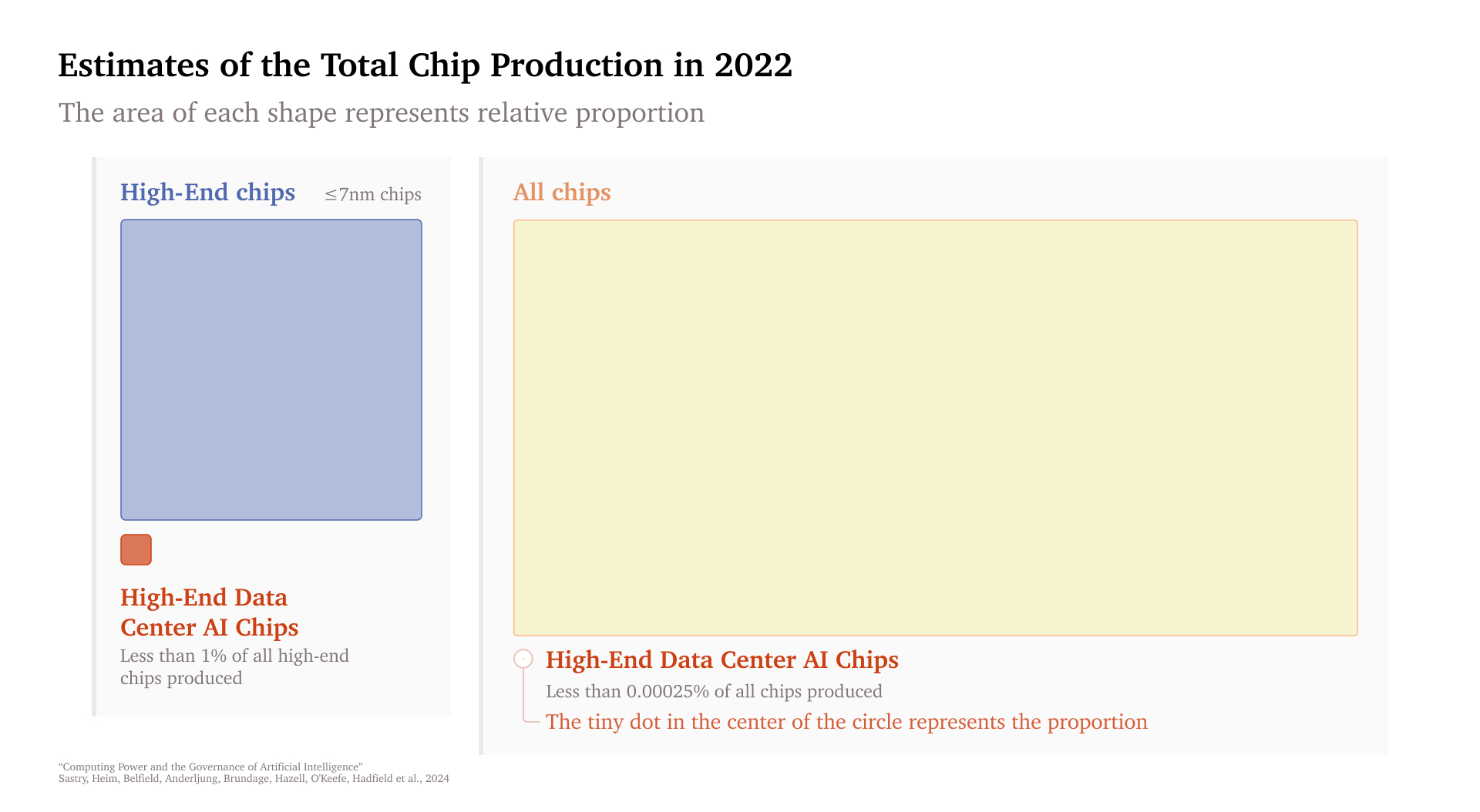

The focus of this report is high-end data centre AI chips. This is industrial-scale compute: advanced AI chips housed in massive, football-field-sized data centres that require extensive cooling and consume as much electricity as a small town. This is quite different from small-scale or personal use, such as the GPU in a PS5 or a small academic cluster. Production of high-end data centre AI chips is a tiny part of overall chip production.

Data-centre AI chips are a minor segment of overall and high-end chip production. For 2022, the report estimates that high-end data centre AI chips made up less than 1% of all high-end (≤7 nm) chips and roughly 1 in 400,000 (0.00026%) of all chips produced.

The compute supply chain is highly complex and specialised. It is a worldwide technical and industrial achievement, comparable to massive energy infrastructure, container ships and ports, or the Earth’s web of satellites.

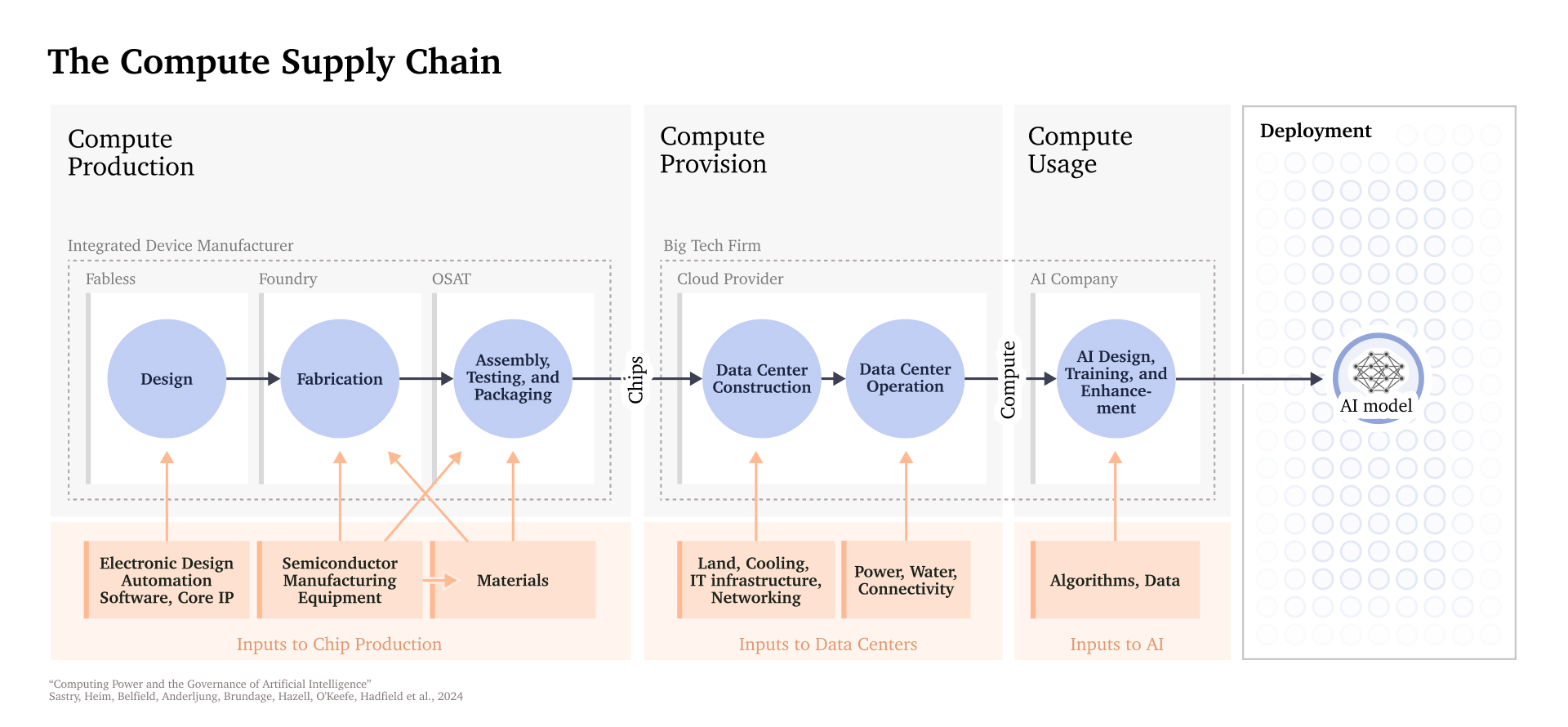

An overview of the AI compute supply chain. First, chips are produced through a process of design, fabrication, and testing. They are then distributed and accumulated in data centres. Compute users, such as AI developers, can then train and run AI systems on these AI supercomputers.

This supply chain is so complex and costly that it is also remarkably concentrated in just a few companies. This could make governance more tractable, since it may be easier to reach agreements with, and establish oversight of, only a handful of companies.

The supply chain for AI chips is highly concentrated. Several critical steps, including AI chip design and production, have fewer than three suppliers. Even at the frontier, AI development involves only tens of organisations. These facts enhance the governability of the compute supply chain, and they also reflect how difficult it is to compete at the cutting edge of chip production.

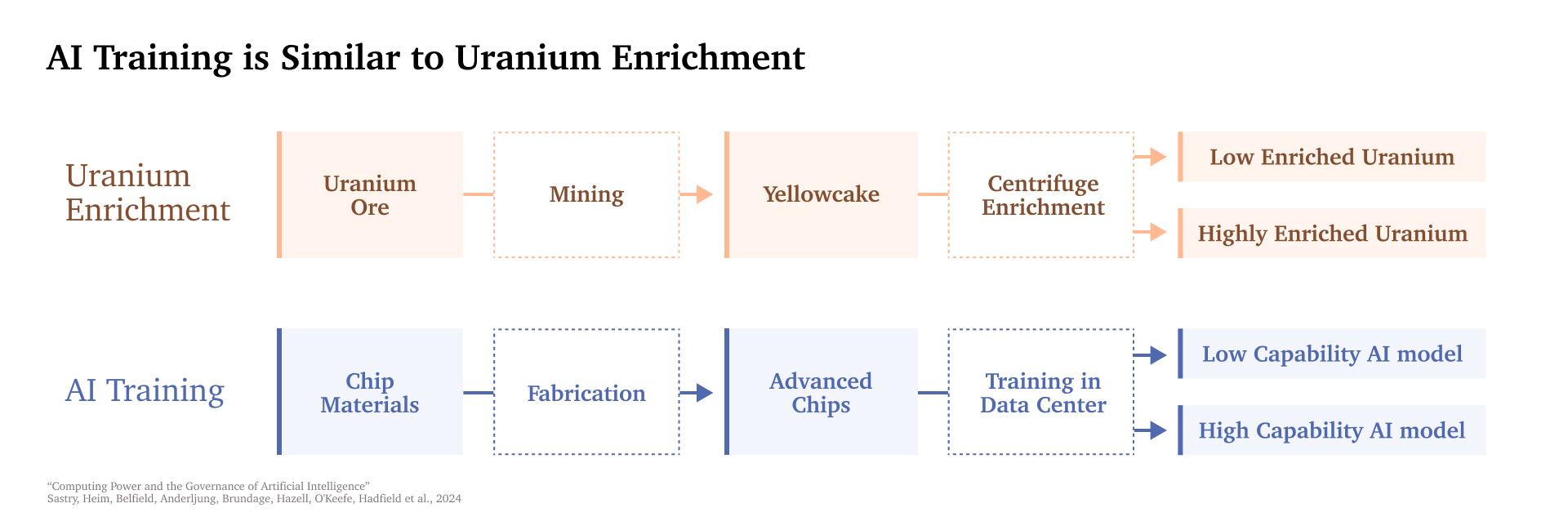

One suggestive analogy is between AI training and uranium enrichment: in each, a key input goes through a process that is lengthy, difficult, expensive and potentially amenable to monitoring. By comparison, there are 6 to 59 manufacturers for each of the dual-use products listed by the Nuclear Suppliers Group, whereas some steps in the compute supply chain have only 1 to 3 companies.

The analogy between uranium enrichment and AI training. For both AI (chips) and nuclear energy (uranium), there is a key input that is difficult to produce and potentially regulable.
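The supplier counts above (1 to 3 companies for some compute steps versus 6 to 59 for each Nuclear Suppliers Group dual-use product) can be turned into a single concentration score with the Herfindahl-Hirschman Index (HHI), the sum of squared market shares in percent, which reaches 10,000 for a monopoly. The HHI is a standard economics measure, not a method taken from the report, and the helper `equal_share_hhi` below assumes, purely for illustration, that suppliers split a market equally, which real markets rarely do.

```python
"""Sketch: Herfindahl-Hirschman Index (HHI) as a measure of supplier concentration."""


def hhi(shares_percent: list[float]) -> float:
    """Sum of squared market shares, with shares given in percent (0-100)."""
    if abs(sum(shares_percent) - 100.0) > 1e-6:
        raise ValueError("market shares must sum to 100%")
    return sum(share * share for share in shares_percent)


def equal_share_hhi(n_suppliers: int) -> float:
    """HHI if n suppliers split the market equally (illustrative simplification)."""
    if n_suppliers < 1:
        raise ValueError("need at least one supplier")
    return hhi([100.0 / n_suppliers] * n_suppliers)
```

Under the equal-split assumption, 1 to 3 suppliers give an HHI between 10,000 and about 3,333, while 6 to 59 suppliers give roughly 1,667 down to 169, which is one way to see how much narrower the compute bottlenecks are. With real, unequal market shares (for example 50%, 30% and 20%), `hhi` gives 3,800, higher than the equal three-way split.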

Computing Power and the Governance of Artificial Intelligence is available to download and read here: https://www.cser.ac.uk/media/uploads/files/Computing-Power-and-the-Governance-of-AI.pdf