EU AI Act for High Risk AI in a Nutshell

Article written by Yulu Pi, a CFI Research Assistant on our EU AI Act Toolkit project and PhD candidate at the University of Warwick.

It is a done deal: the days of the AI industry regulating itself are over. With EU lawmakers having struck a deal on the AI Act and set up the AI Office within the European Commission to enforce it, companies that develop and deploy AI have been put on clear notice that they must now comply with binding rules. Mitigating the risks of your AI systems is no longer just a moral choice; it is a legal obligation.

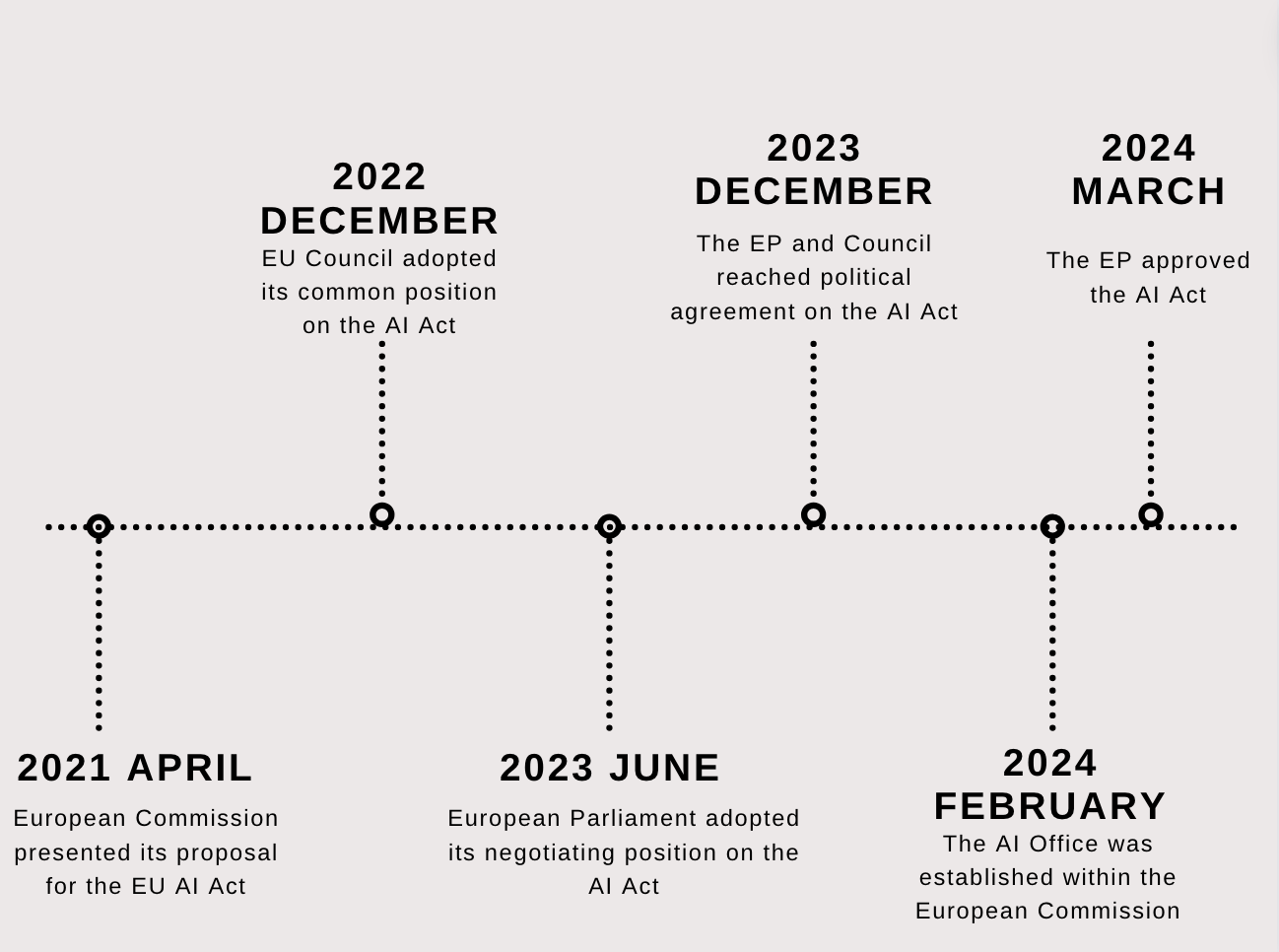

Image Caption: A brief overview of the legislative journey and timeline leading to the AI Act.

How does the AI Act regulate AI?

The EU AI Act, the world’s first comprehensive AI law, sorts AI systems into risk tiers according to their potential for harm, and each tier comes with its own set of rules to ensure safety and responsibility. Eight AI practices will be prohibited outright, including emotion recognition in workplaces and educational settings, social scoring, predicting the risk that an individual will commit a crime based solely on profiling, and AI designed to manipulate human behavior or exploit people’s vulnerabilities. While most of the AI we encounter in daily life, such as recommender systems and spam filters, will get a free pass, high-risk AI will face stringent rules to mitigate its risks.

As the AI Act is expected to be formally adopted this May, with most of its obligations applying only after a transition period, let's break down what those rules entail for governing high-risk AI.

Does your AI fall into the high-risk category?

The Act treats as high-risk those AI systems that pose a significant risk of harm to people’s health, safety, or fundamental rights. Examples include AI used in critical infrastructure, transportation, and healthcare, as well as AI used in decisions with legal or similarly significant effects, such as credit scoring or hiring (see Annexes I and III of the EU AI Act for the full lists). You can also check whether your AI falls into this category using a free online tool.

What are the obligations of high-risk AI providers?

Let’s consider a high-risk scenario: using AI to accept or reject a credit application.

Imagine you've just applied for a mortgage to buy a new house. Your bank informs you that it has trained an AI model to evaluate your application. As the provider of a high-risk AI system, what does the bank need to do to comply with the EU AI Act?

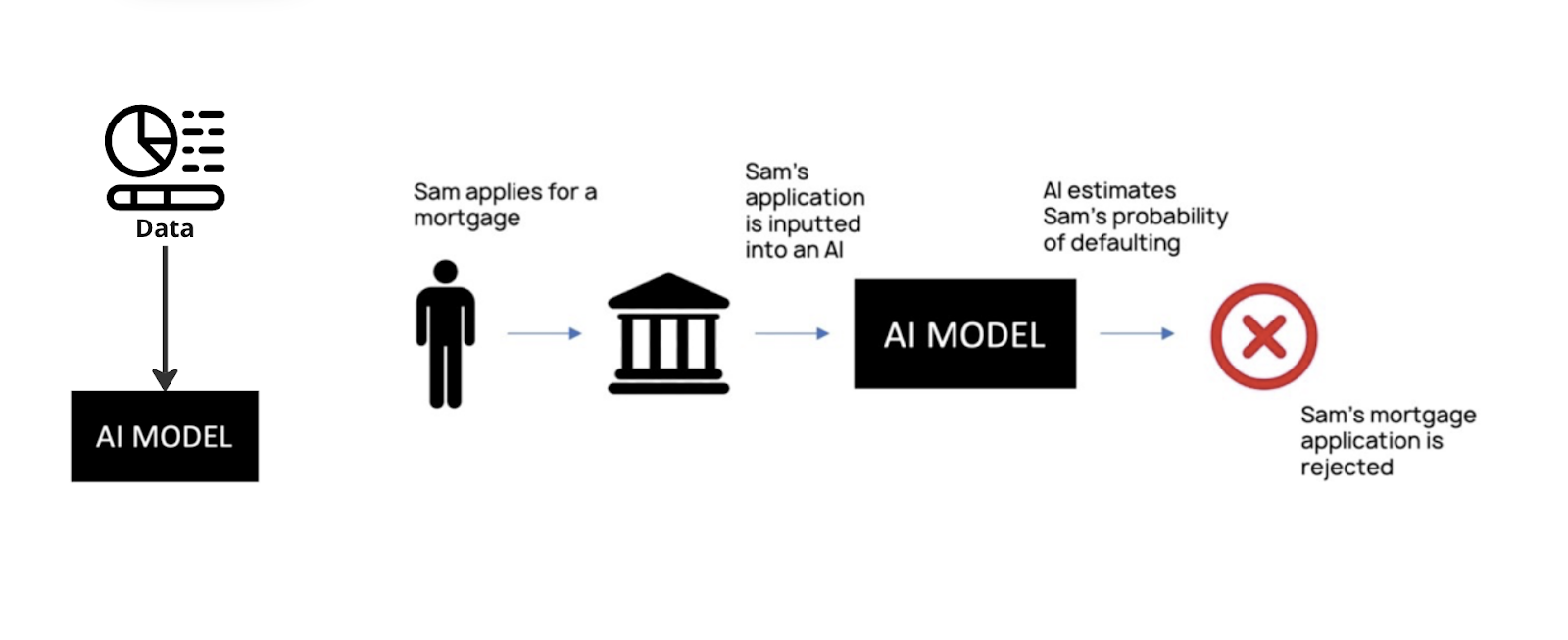

Image Caption: A speculative scenario where an AI model rejects a mortgage application.

In this simplified scenario, the bank both develops and deploys the AI system. Real life can be more complicated: a bank might instead buy an AI system from another provider, in which case it only uses the system and is a "deployer" rather than a "provider" under the Act. Because the Act assigns different obligations to providers and deployers, this adds another layer of complexity to the requirements below.

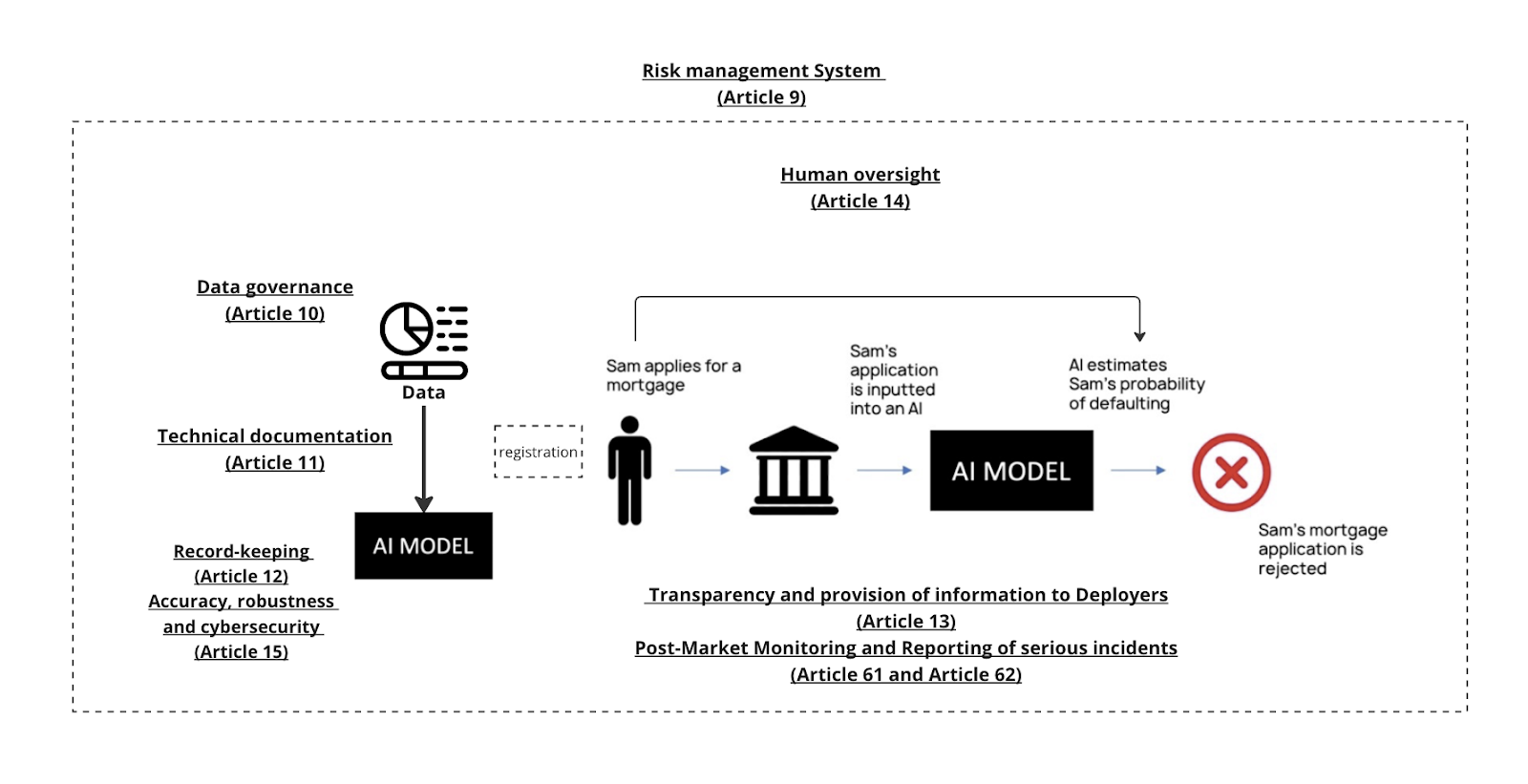

Data and Data Governance (Article 10): The bank must subject the data used for training, validation, and testing to appropriate data governance practices, so that the data is relevant, sufficiently representative, and, to the best extent possible, free of errors and complete. In practice, this means assessing whether the required data is available and suitable before using it, documenting how the data is collected and processed, examining it for possible biases, and identifying and properly addressing any gaps or shortcomings.
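To make these data governance checks more concrete, here is a minimal Python sketch of two checks a team might run on its training data: the share of missing values in a field (completeness) and how far each group's share of the data deviates from a reference population (representativeness). The records, field names, reference shares, and the 0.10 tolerance are all hypothetical; Article 10 does not prescribe specific metrics or thresholds.

```python
from collections import Counter

# Hypothetical loan-application records; field names and values are illustrative only.
records = [
    {"income": 52000, "age_band": "18-34"},
    {"income": None, "age_band": "35-54"},
    {"income": 61000, "age_band": "35-54"},
    {"income": 47000, "age_band": "55+"},
]

# Assumed reference shares for each group (e.g. from population statistics); made up here.
REFERENCE_SHARES = {"18-34": 0.40, "35-54": 0.30, "55+": 0.30}
GAP_THRESHOLD = 0.10  # arbitrary tolerance chosen for this example


def missing_rate(rows, field):
    """Completeness check: share of rows where `field` is missing or None."""
    return sum(1 for r in rows if r.get(field) is None) / len(rows)


def representation_gaps(rows, field, reference):
    """Representativeness check: each group's share in the data minus its reference share."""
    counts = Counter(r[field] for r in rows)
    return {g: counts.get(g, 0) / len(rows) - share for g, share in reference.items()}


income_missing = missing_rate(records, "income")
gaps = representation_gaps(records, "age_band", REFERENCE_SHARES)
# Keep only the groups whose share deviates from the reference by more than the tolerance.
flagged = {g: round(d, 2) for g, d in gaps.items() if abs(d) > GAP_THRESHOLD}

print(f"Share of records missing income: {income_missing:.0%}")
print("Groups outside tolerance:", flagged)
```

Here the sketch would flag that younger applicants are under-represented and middle-aged applicants over-represented. Under the Act, the point is not the script itself but that such findings are documented and then addressed, for example by collecting additional data.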

Technical Documentation (Article 11): As with data governance, detailed documentation is required across the AI model's development, deployment, and monitoring. The bank must maintain records describing the AI model's type, logic, performance metrics, and key design choices (Annex IV sets out the minimum information required). This documentation is central to demonstrating regulatory compliance and makes it easier for regulatory authorities to audit and assess the system.

Record-Keeping (Article 12): The bank must ensure that the AI system is technically capable of automatically recording events (logs) throughout its lifetime. These logs should capture details such as when the system was used, what data was input, and who verified the results.
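As a rough illustration of automatic record-keeping, the minimal Python sketch below appends one event per decision to a JSON Lines file, capturing the kinds of details mentioned above. The `log_event` function, its field names, and the file name are hypothetical; Article 12 requires logging capabilities but does not prescribe a format, and a real system would also need to handle retention, access control, and data protection, which this sketch ignores.

```python
import json
import os
import tempfile
from datetime import datetime, timezone


def log_event(log_path, application_id, model_version, inputs, output, reviewer):
    """Append one usage event to a JSON Lines file (hypothetical schema)."""
    event = {
        "timestamp": datetime.now(timezone.utc).isoformat(),  # when the system was used
        "application_id": application_id,
        "model_version": model_version,
        "inputs": inputs,          # what data was fed into the model
        "output": output,          # what the model produced
        "reviewed_by": reviewer,   # who checked the result
    }
    # Append mode keeps earlier entries; each line is one self-contained JSON record.
    with open(log_path, "a", encoding="utf-8") as f:
        f.write(json.dumps(event) + "\n")
    return event


# Example: log a single decision to a file in a fresh temporary directory.
log_path = os.path.join(tempfile.mkdtemp(), "credit_ai_log.jsonl")
log_event(log_path, "APP-001", "credit-model-1.0",
          {"income": 52000, "loan_amount": 250000}, "reject", "analyst_42")

# Read the log back, one JSON record per line.
with open(log_path, encoding="utf-8") as f:
    entries = [json.loads(line) for line in f]
print(entries[0]["timestamp"], entries[0]["reviewed_by"])
```

JSON Lines is used here only because each entry can be appended and read independently; any append-only store would serve the same purpose.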

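Transparency and Provision of Information to Deployers (Article 13): The bank must design and develop the AI system so that its operation is transparent enough for deployers (in this case, the bank’s own staff) to interpret the system's output and use it appropriately. It must also accompany the system with instructions for use describing, among other things, its intended purpose, level of accuracy, and limitations.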
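One simple way to make a credit model's output interpretable for staff, sketched below in Python, is to use a logistic scoring model and report each feature's additive contribution to the score. This is only one possible technique, chosen for illustration; Article 13 does not mandate any particular explanation method, and the feature names, weights, and bias term here are invented.

```python
import math

# Hypothetical weights of a simple logistic scoring model (illustrative values only).
# Positive weights push the estimated default probability up, negative weights push it down.
WEIGHTS = {"debt_to_income": 3.0, "missed_payments": 0.8, "years_employed": -0.15}
BIAS = -2.0


def explain(applicant):
    """Return the estimated default probability and each feature's contribution to the log-odds."""
    contributions = {f: w * applicant[f] for f, w in WEIGHTS.items()}
    log_odds = BIAS + sum(contributions.values())
    probability = 1 / (1 + math.exp(-log_odds))  # logistic (sigmoid) function
    # Sort so staff see the factors that raised the risk score the most first.
    ranked = sorted(contributions.items(), key=lambda kv: kv[1], reverse=True)
    return probability, ranked


prob, ranked = explain({"debt_to_income": 0.45, "missed_payments": 2, "years_employed": 6})
print(f"Estimated default probability: {prob:.2f}")
for name, contribution in ranked:
    print(f"  {name}: {contribution:+.2f}")
```

For a linear model these contributions add up exactly to the score, which is what makes them easy to communicate; more complex models would need other explanation techniques, with their own limitations.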

Human Oversight (Article 14): The bank must ensure effective human oversight whenever AI is used to decide on people’s credit applications. The human reviewers involved must be able to understand what the AI system can and cannot do, correctly interpret its output, and decide to disregard or override it, or step in and stop it, if something goes wrong.
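The following minimal Python sketch shows one possible design for keeping a human in the loop: the model only produces a recommendation, and the final decision can only be set by a reviewer, with any override recorded. The `CreditDecision` class, the `recommend` and `human_review` functions, and the 0.3 threshold are hypothetical; Article 14 sets out what oversight must achieve, not how to implement it.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CreditDecision:
    application_id: str
    default_probability: float            # hypothetical model output
    model_recommendation: str             # what the AI suggests
    final_decision: Optional[str] = None  # set only by a human reviewer
    overridden: bool = False
    reviewer_note: str = ""


def recommend(application_id, default_probability, threshold=0.3):
    """The model only recommends; the 0.3 threshold is an arbitrary example value."""
    rec = "reject" if default_probability >= threshold else "approve"
    return CreditDecision(application_id, default_probability, rec)


def human_review(decision, reviewer_decision, note=""):
    """A reviewer confirms or overrides the recommendation; overrides are recorded."""
    if reviewer_decision not in ("approve", "reject"):
        raise ValueError("reviewer_decision must be 'approve' or 'reject'")
    decision.final_decision = reviewer_decision
    decision.overridden = reviewer_decision != decision.model_recommendation
    decision.reviewer_note = note
    return decision


decision = recommend("APP-001", default_probability=0.42)
decision = human_review(decision, "approve", note="Applicant's income figure was outdated.")
print(decision.model_recommendation, decision.final_decision, decision.overridden)
```

Recording overrides, rather than just the final outcome, also gives the bank useful evidence for monitoring how often reviewers disagree with the model.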

Accuracy, Robustness, and Cybersecurity (Article 15): The bank must design and develop the AI system to achieve an appropriate level of accuracy, robustness, and cybersecurity, and must ensure that it performs consistently in these respects throughout its lifecycle.

Post-Market Monitoring and Reporting of Serious Incidents (Articles 72 and 73; Articles 61 and 62 in the Commission’s original proposal): Once the AI system is in use, the bank must monitor its performance to identify any issues that need fixing. If something goes seriously wrong, the bank must report the serious incident to the relevant authorities.
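Part of post-market monitoring can be automated. The minimal Python sketch below compares the model's accuracy over consecutive windows of decisions with the accuracy measured before deployment and flags windows that fall too far below it. The function name, window size, tolerance, and data are hypothetical. In practice, loan outcomes only become known months later, and monitoring would usually cover more than accuracy; deciding whether a problem counts as a serious incident to report remains a human and legal judgment.

```python
def monitor_accuracy(outcomes, baseline_accuracy, tolerance=0.05, window=4):
    """Split (predicted, actual) pairs into consecutive windows and flag any window
    whose accuracy is more than `tolerance` below the pre-deployment baseline.
    A trailing partial window is ignored."""
    alerts = []
    for start in range(0, len(outcomes) - window + 1, window):
        batch = outcomes[start:start + window]
        accuracy = sum(pred == actual for pred, actual in batch) / window
        if accuracy < baseline_accuracy - tolerance:
            alerts.append((start // window, accuracy))
    return alerts


# Hypothetical outcomes: model prediction vs. whether the borrower actually defaulted.
outcomes = [
    ("repay", "repay"), ("default", "default"), ("repay", "repay"), ("repay", "repay"),
    ("repay", "default"), ("repay", "repay"), ("repay", "default"), ("default", "default"),
]
alerts = monitor_accuracy(outcomes, baseline_accuracy=0.90)
for window_index, accuracy in alerts:
    print(f"Window {window_index}: accuracy {accuracy:.0%} is below baseline; investigate")
```

The window size of four is only there to keep the example small; a real monitoring setup would use much larger windows so that a drop reflects a genuine trend rather than noise.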

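Risk Management System (Article 9): The bank must establish a risk management system that runs as a continuous, iterative process throughout the AI system's lifecycle, identifying, evaluating, and mitigating the risks of using AI to decide credit applications. Because banks are already subject to risk management requirements under EU financial services law, this process can be integrated with the bank's existing risk management procedures.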

Image Caption: A visual guide to the high-risk AI requirements under the AI Act, for the speculative scenario of a bank using AI to decide whether a mortgage application is successful.

Does the AI Act grant me any rights if I am affected by AI?

You might have noticed that all these requirements are directed at those developing and deploying AI. However, if you find yourself adversely affected by AI, you might wonder about your rights. For instance, your credit application could be unfairly denied due to errors or biases in the AI system.

The AI Act has sparked considerable debate over its limited focus on protecting individual rights. When the European Commission introduced its draft of the AI Act in April 2021, concerns arose about how well it would safeguard human rights, mainly because it lacked a robust complaint and redress mechanism. The approved version, however, gives individuals the right to lodge a complaint with a market surveillance authority if they believe the Act has been infringed and, where a decision based on a high-risk AI system's output significantly affects them, the right to an explanation of the role the AI played in that decision.

It’s an important first step towards giving people more control in an increasingly automated world. However, concerns remain that because the AI Act does not grant a right to redress, individuals affected by AI may have no effective way to obtain a remedy or to correct erroneous AI decisions.

Keep an eye out for our AI Act Toolkit, coming soon.

Complying with the AI Act can be daunting for providers of high-risk AI, as it requires extensive documentation covering, among other things, the risk management system and data governance, as well as a declaration of conformity.

Our AI Act Toolkit project is developing a step-by-step, pro-justice compliance tool for developers of high-risk AI. The tool will help anyone involved in complying with the AI Act by streamlining the mandatory internal control and documentation process, while also providing crucial guidance on the ethical and social considerations related to their products. It integrates and reorganizes the requirements of the AI Act into step-by-step instructions and goes beyond mere legal compliance, prompting AI development teams to think critically about how AI relates to structural inequality and to engage meaningfully with the Act. Best of all, it's going to be free and available online this summer. Stay tuned!

CFI Blog Series curated by Dr Aisha Sobey.